Low-cost open source ultrasound-sensing based navigational support for visually impaired

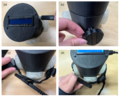

Nineteen million Americans have significant vision loss. Over 70% of them are not employed full-time, and more than a quarter live below the poverty line. Globally, there are 36 million blind people, but fewer than half use white canes or more costly commercial sensory substitution devices. The resulting lack of independence hampers the quality of life of visually impaired people. To help alleviate these challenges, this study reports on the development of a low-cost (<$24), open-source navigational support system that allows people with vision loss to navigate, orient themselves in their surroundings, and avoid obstacles when moving. The system can largely be made with digitally distributed manufacturing using low-cost 3-D printing/milling. It conveys point-distance information using a natural active sensing approach and modulates measurements into haptic feedback with various vibration patterns within a distance range of 3 m. The developed system allows people with vision loss to solve the primary tasks of navigation, orientation, and obstacle detection (>20 cm, stationary or moving at up to 0.5 m/s) to ensure their safety and mobility. Blindfolded sighted participants successfully used the device in eight primary everyday navigation and guidance tasks, including indoor and outdoor navigation and avoiding collisions with other pedestrians.

- GitHub page with Arduino code: https://github.com/apetsiuk/MOST-Ultrasound-based-Navigational-Support

- 3D CAD models (if you have trouble accessing them, use https://osf.io/srhjb/)

- Customizable bracelet

- Supplementary video

Motivation and project description

Nineteen million Americans have significant vision loss. Over 70% are not employed full-time, and more than a quarter live below the poverty line. Globally, there are 36 million blind people, but fewer than half use white canes or more costly commercial sensory substitution devices. The resulting lack of independence hampers the quality of life of visually impaired people. To help alleviate this challenge, this study reports on the development of a low-cost (<$24), open-source navigational support system that allows people with vision loss to solve the primary tasks of navigation, orientation, and obstacle detection to ensure their safety and mobility.

The proposed system can largely be produced by digitally distributed manufacturing. It conveys point-distance information using a natural active sensing approach and modulates measurements into haptic feedback with various vibration patterns within a distance range of 3 meters. The developed system allows people with vision loss to solve the primary tasks of navigation, orientation, and obstacle detection (>20 cm, stationary or moving at up to 0.5 m/s) to ensure their safety and mobility. Blindfolded sighted participants successfully used the device in eight primary everyday navigation and guidance tasks, including indoor and outdoor navigation and avoiding collisions with other pedestrians.
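As a rough illustration of how a distance measurement could be turned into haptic feedback, the sketch below converts an ultrasonic round-trip echo time into a distance and picks a vibration pattern for it. It is not the project's Arduino firmware: the function names, the band limits, and the pattern names are hypothetical, and the speed of sound is assumed to be about 343 m/s (air at roughly 20 °C). Only the 3 m maximum range comes from the description above.

```python
# Assumed speed of sound in air (~343 m/s at about 20 °C), in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343
# Maximum sensing range stated in the project description (3 m).
MAX_RANGE_CM = 300.0

# Hypothetical bands: (upper distance limit in cm, vibration pattern name).
PATTERN_BANDS = [
    (50.0, "continuous"),
    (100.0, "fast pulses"),
    (200.0, "slow pulses"),
    (MAX_RANGE_CM, "single tick"),
]


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time in microseconds to a one-way distance in cm."""
    # The pulse travels to the obstacle and back, so halve the path length.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def vibration_pattern(distance_cm: float) -> str:
    """Pick a vibration pattern for a distance; 'off' when nothing is in range."""
    if distance_cm <= 0:
        return "off"
    for upper_limit_cm, pattern in PATTERN_BANDS:
        if distance_cm <= upper_limit_cm:
            return pattern
    return "off"


if __name__ == "__main__":
    for echo_us in (2000.0, 8000.0, 20000.0):
        distance = echo_to_distance_cm(echo_us)
        print(f"{echo_us:.0f} us -> {distance:.1f} cm -> {vibration_pattern(distance)}")
```

Closer obstacles map to more insistent patterns here, which is one plausible design choice; the actual patterns and thresholds should be taken from the project's published code.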

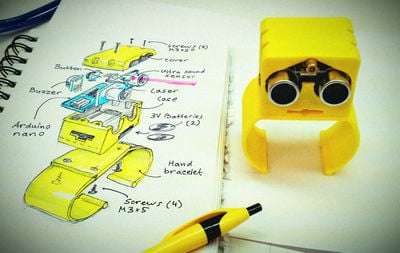

Design process

The first version of the Blind Person's Assistant was presented as part of an Open Source Appropriate Technology (OSAT) project for Dr. Joshua M. Pearce's "Open-source 3D printing" course in 2018.

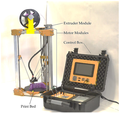

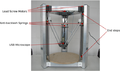

The design went through several iterations to improve functionality and ergonomics, using different materials and shapes for the case. The electronics were also significantly revised to maintain effective functionality with minimal dimensions. FFF 3D printing provides the best distribution and replication capability, while the flexible filament allows reliable assembly without metal parts, fits the wrist comfortably, holds the sensor core tightly, and absorbs excess vibration.

Preliminary testing of the device was judged a success because all three participants were able to complete the eight tasks outlined in the study's methods section. During the experiments, all participants noted the effectiveness of the haptic interface, the intuitiveness of the learning and adaptation process, and the usability of the device. The system responds quickly, naturally complements a person's primary sensory perception, and allows detection of both moving and static objects.

Future work should include further experiments to obtain more data and a comprehensive analysis of the system's performance. This will also make it possible to improve the efficiency of the tactile feedback, since alternating patterns of high-frequency vibrations, low-frequency impulses, and beats of different periodicity can significantly expand the range of sensory perception.

Conclusions

The developed low-cost (<$24 USD), open-source navigational support system allows people with vision loss to solve the primary tasks of navigation, orientation, and obstacle detection (>20 cm, stationary or moving at up to 0.5 m/s, within a distance range of up to 3 meters) to ensure their safety and mobility. The device demonstrated intuitive haptic feedback, which becomes easier to use after short practice. It can largely be digitally manufactured as an independent device or as a complement to existing means of sensory augmentation (e.g. a white cane). The device operates over distance ranges similar to those of most of the commercial products reviewed, and it can be replicated by a person without advanced technical qualifications. Since the prices of available commercial products range from $100 to $800 USD, the cost savings range from a minimum of 76% to over 97%.
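The savings figures follow directly from the stated prices. A minimal check, assuming the device costs the full $24 upper bound (so actual savings would be at least this large); the function name is illustrative only:

```python
# Upper bound on the device cost stated above (USD).
DEVICE_COST_USD = 24.0


def savings_percent(commercial_price_usd: float,
                    device_cost_usd: float = DEVICE_COST_USD) -> float:
    """Percentage saved by building the device instead of buying a commercial product."""
    return (1.0 - device_cost_usd / commercial_price_usd) * 100.0


if __name__ == "__main__":
    # Lowest and highest commercial prices quoted in the conclusions.
    for price in (100.0, 800.0):
        print(f"${price:.0f} product: {savings_percent(price):.0f}% savings")
```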

See also

Open Source Devices

Health Policy

- Emergence of Home Manufacturing in the Developed World: Return on Investment for Open-Source 3-D Printers

- Life-cycle economic analysis of distributed manufacturing with open-source 3-D printers

- Distributed Manufacturing of Flexible Products: Technical Feasibility and Economic Viability

- Impact of DIY Home Manufacturing with 3D Printing on the Toy and Game Market

- Quantifying the Value of Open Source Hardware Development

- Open-source, self-replicating 3-D printer factory for small-business manufacturing

- Distributed manufacturing with 3-D printing: a case study of recreational vehicle solar photovoltaic mounting systems

- Global value chains from a 3D printing perspective

- Economic Impact of DIY Home Manufacturing of Consumer Products with Low-cost 3D Printing from Free and Open Source Designs

- Leveraging Open Source Development Value to Increase Freedom of Movement of Highly Qualified Personnel
